I have a new paper on example-based mesh deformation appearing in SIGGRAPH Asia this year. You can access the full paper, entitled “Fast and Reliable Example-Based Mesh IK for Stylized Deformations”, and some videos here, but I’ll give a quick non-technical overview of the method in this post.

The goal of this project was to create a technique for example-based inverse-kinematic mesh manipulation that works reliably and in real time across a wide range of cases. That’s a bit of a mouthful, so I’ll start by describing the idea from the beginning.

Let’s say you have some shape that you want to animate. To make things concrete, let’s say you want to animate this snake:

If you want the maximum amount of control and don’t care if creating the animation takes forever, there are plenty of tools available to do this by hand-drawing each frame, or keyframing, or some related technique. But if you want to create the animation interactively, for instance by clicking and dragging parts of the snake in real time, then your options are a bit more limited.

One common approach is to treat this problem as an elastic deformation subject to point constraints. That is, you pre-specify a few points on the snake that you’re going to let the user move around. For instance you could add one point on the head and another on the end of the tail. Then the user can move the head and tail wherever they like in real-time, and the algorithm will automatically figure out how the rest of the snake should bend. Of course, for any given placement of the snake’s head and tail, there are lots of ways the rest of the snake could bend, most of which look like complete nonsense. So instead you have the algorithm solve for a way that the snake can bend as smoothly as possible.
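To make that a bit more concrete, here’s a minimal sketch of the point-constraint idea on a toy “snake” made of a chain of 2D points. It is not the energy from the paper: as a stand-in it uses about the simplest smoothness measure there is, where every unconstrained point’s displacement is relaxed toward the average of its two neighbors’ displacements. The function name `deform_chain` and its parameters are made up for this illustration, and the sketch assumes both ends of the chain are handles.

```python
def deform_chain(rest, handles, iterations=500):
    """Smoothly deform a chain of 2D points under point constraints.

    rest:    list of (x, y) rest positions along the chain
    handles: dict mapping point index -> (x, y) target position
             (assumption: indices 0 and len(rest) - 1 are both handles)
    """
    n = len(rest)
    # Displacement of every point from its rest position, initially zero.
    disp = [(0.0, 0.0)] * n
    # Handles get exactly the displacement the user asked for.
    for i, (tx, ty) in handles.items():
        disp[i] = (tx - rest[i][0], ty - rest[i][1])
    # Gauss-Seidel relaxation: each free point's displacement becomes the
    # average of its neighbors', which smooths the displacement field.
    for _ in range(iterations):
        for i in range(1, n - 1):
            if i in handles:
                continue
            disp[i] = (
                (disp[i - 1][0] + disp[i + 1][0]) / 2.0,
                (disp[i - 1][1] + disp[i + 1][1]) / 2.0,
            )
    return [(rest[i][0] + disp[i][0], rest[i][1] + disp[i][1]) for i in range(n)]
```

For example, with `rest = [(float(i), 0.0) for i in range(5)]`, pinning the tail with `0: (0.0, 0.0)` and dragging the head with `4: (4.0, 2.0)` makes the points in between follow along smoothly. A real mesh deformer would use a richer energy and a proper linear or nonlinear solver, but the structure is the same: a few constrained points, and everything else solved for.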

If you try this out, you’ll get something like this:

I think that’s actually pretty neat, but as you can see it still leaves a lot to be desired. The problem is that although there are a number of different ways that you can quantify what it means for the snake to bend “as smoothly as possible”, there’s still a limited amount of artistic control. This shows through in the animations, where everything tends to look like it was cut out of a sheet of rubber.

To add artistic control, this paper takes the approach of what is called example-based deformation. The idea (which we didn’t invent) is that instead of giving a single shape for the snake, the artist would provide multiple shapes:

There’s a lot of extra information contained in these images, and you can use that to make the snake bend more realistically. Here’s how the result looks with our method:

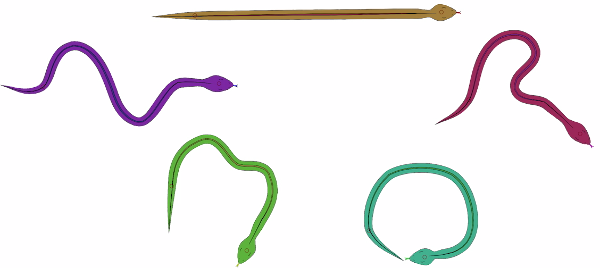

To make what’s going on here a bit more obvious, it helps to show this example with each of the input images drawn in a different color. This will make it easier to visualize which of these inputs is being used at each point in the resulting animation:

Below you’ll see the same snake animation as before, but now the color shows how strongly each of the input shapes is influencing the deformation. So when the snake in the animation is drawn in purple, it’s using the purple input image as the reference for the deformation. Of course, most of the time the algorithm will be using a combination of multiple input shapes at once, in which case the animation is rendered by blending between the colors of the corresponding input shapes:

This all seems well and good, but it turns out that there’s a devil in the details of getting this to “just work” for a wide range of different input shapes, and to run fast enough to be used in real-time applications. That’s what this paper is aimed at addressing.

There are three components of our method that help it work well. First, we define a new form of the example-based elastic energy governing how the shape stretches and bends, and how it utilizes the input examples. The energies used by previous methods intertwine two separate issues: how the shape deforms when you set aside the handling of multiple examples, and how the different examples are utilized. This makes it difficult to control the trade-offs between these two aspects, and leads to a range of different artifacts depending on the specifics of how things are implemented. Our solution is essentially to formulate the energy so that it acts both as a typical elastic energy with regard to the shape’s deformation, and as a scattered data interpolation technique with respect to how the different example inputs are used and combined. It turns out that this works pretty well, and gives you more control over the deformation and the way the examples are used (although we only deeply explore one of the many possible variations in this paper).
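If “scattered data interpolation” is unfamiliar, here’s a rough sketch of what it means in this context. Each example shape is imagined to sit at a “site” in some parameter space, and a query point gets a set of weights that sum to one and reproduce an example exactly when the query lands on its site. The sketch uses inverse-distance (Shepard) weighting and plain linear blending of vertex positions purely as stand-ins; the paper’s actual formulation is different and is described in the paper itself. The names `shepard_weights` and `blend_shapes`, and the notion of a site, are assumptions made for this illustration.

```python
def shepard_weights(query, sites, power=2.0, eps=1e-12):
    """Inverse-distance weights of a query point with respect to example sites.

    query: tuple of floats
    sites: list of tuples, one per example (hypothetical parameter-space positions)
    """
    # Squared distance from the query to every site.
    d2 = [sum((q - s) ** 2 for q, s in zip(query, site)) for site in sites]
    # Exactly on a site: that example gets all the weight (interpolation).
    for i, d in enumerate(d2):
        if d < eps:
            return [1.0 if j == i else 0.0 for j in range(len(sites))]
    # Otherwise weight each example by 1 / distance**power, then normalize.
    raw = [d ** (-power / 2.0) for d in d2]
    total = sum(raw)
    return [r / total for r in raw]


def blend_shapes(examples, weights):
    """Weighted average of example shapes, each a list of (x, y) vertices."""
    count = len(examples[0])
    return [
        tuple(sum(w * ex[v][k] for w, ex in zip(weights, examples)) for k in range(2))
        for v in range(count)
    ]
```

With two examples at sites `(0.0,)` and `(1.0,)`, a query at `(0.5,)` gets equal weights, and a query exactly at `(0.0,)` returns the first example unchanged. The appeal of framing things this way is that how the examples are mixed can be tuned separately from how the shape stretches and bends.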

In addition, to get real-time performance we use an example-based linear subspace reduction, inspired by a similar approach used in the Fast Automatic Skinning Transformations algorithm. Finally, both the example-based elastic energy and the subspace reduction are done in a way that lets the examples be used in a spatially localized manner, rather than being globally constant across the entire shape. In the above snake animation you can see this on some frames where the snake’s color changes between its head and its tail. This ends up being particularly useful for getting good results on articulated characters where you move the arms and legs around, since it lets the different limbs utilize different input examples.
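As a rough picture of what a linear subspace reduction buys you, here’s a small sketch of a skinning-style subspace in 2D: instead of solving for every vertex, you solve only for a handful of affine transforms, and each vertex position is a fixed weighted blend of those transforms applied to its rest position. This is plain linear blend skinning, shown only to illustrate the idea; it leaves out the example-based part of our reduction entirely. The function name `skin` and the data layout are assumptions made for this illustration.

```python
def skin(rest, weights, transforms):
    """Reconstruct all vertex positions from a few affine transforms.

    rest:       list of (x, y) rest positions, one per vertex
    weights:    weights[i][j] = influence of transform j on vertex i
                (assumed to sum to 1 for each vertex)
    transforms: list of 2x3 affine matrices ((a, b, tx), (c, d, ty))
    """
    out = []
    for (x, y), w in zip(rest, weights):
        px = py = 0.0
        for wj, ((a, b, tx), (c, d, ty)) in zip(w, transforms):
            # Apply transform j to the rest position, scaled by its weight.
            px += wj * (a * x + b * y + tx)
            py += wj * (c * x + d * y + ty)
        out.append((px, py))
    return out
```

The full shape is a linear function of the entries of `transforms`, so an optimizer only has to work with six numbers per transform no matter how many vertices the mesh has. Because the weights vary from vertex to vertex, different parts of the shape can also follow different transforms, which is the same flavor of spatial locality described above.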

All in all there’s a little bit more work I want to do related to this method, but what we have so far works pretty well for a bunch of different situations, both in 2D and in 3D. You can see a few more examples below:

I’ll probably write some more about this work later, hopefully expanding on what we had space to actually include in the paper itself, but for now you can get further, and substantially more detailed, information at the project website.